This page is for institutions involved in the tracker 2017-18 pilot. Most of these questions are also answered in the Guidance for Institutions but are maintained here as a quick source of help. If you don’t find what you’re looking for here, please see Who can help?

- Why should we use the tracker?

- How much time and resource will we need to commit?

- When should we run the tracker?

- Can we use the tracker with other surveys?

- Does the tracker assess learners’ digital capabilities?

- Does the tracker support learning analytics?

- Where can we see the actual questions?

- Why can we only customise some of the questions?

- Why are there separate questions for different sectors?

- How have the questions been developed and updated?

- Is the tracker mainly about online learning?

- How do we run a prize draw?

- What support will there be for using BOS?

- How will we distribute the tracker to our learners?

- What can we do with the data from the tracker?

- Can we publish reports about our use of the tracker?

Why should we use the tracker?

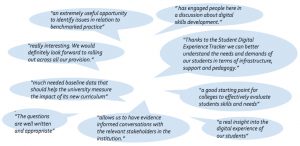

Many universities and colleges recognise that the digital experience is important to their learners, but until recently there was no way of finding out about it reliably or in any detail. We developed the tracker to meet this need. Click on the image to read what pilot users said about the benefits in their institutions.

Some key reasons for using the tracker are:

- Holistic approach, with questions on teaching, learning and private study habits, feelings about technology use and digital confidence, as well as digital access and support.

- Expertly designed and tested survey tool. The survey has been successfully completed by over 40,000 students.

- Evidence based. The question set covers some of the seven challenges for institutions identified in earlier Jisc studies and validated with student groups. Our rigorous evaluations of the pilot process have continuously refined the questions used.

- Actionable findings. Questions are only included where pilot institutions were able to frame a practical response to the findings.

- Ease of use. The survey is implemented in BOS (Bristol Online Surveys), a user-friendly service developed for the UK education system and now owned by Jisc.

- Customisable. You can customise and/or author a whole page of questions. This means you can consult learners about current concerns at the same time as collecting nationally-recognised data, without having to carry out two separate surveys.

- Benchmarking. In addition to all the analytical features of BOS you are provided with benchmarking data, comparing your learners’ responses with other learners across your sector.

- Step by step guidance. Our detailed guides have been developed and refined in practice. They cover not only technical implementation but issues such as ensuring high levels of completion, understanding your responses, analysing your findings alongside other sources of data, and working in partnership with learners to improve their digital experience.

Back to FAQs about the Tracker

When should we run the tracker?

You will receive your unique survey URL in October 2017 and you can run the tracker at any time between then and the closing date of 30 April 2018. We chose this period because by then most learners are well established in their course of study and so have something to say about their experience, and because universities and colleges told us that this was a good time of year to find a space free from other surveys or exams. We recommend opening the survey for a two-week period initially, with a deadline, and extending the deadline if you find that your numbers are continuing to build.

There is more about encouraging learners to participate in our Guide to Engaging your Learners, which you will find on the Guidance page.

How much time and resource will we need to commit?

The tracker process can be managed by a single person, who will submit the confirmation form (20-40 minutes), customise the survey in BOS (40 minutes or more, depending on how many additional questions you decide to use) and launch the survey to learners. How much time you put into securing participation and engagement is up to you. However, we strongly recommend that you work with other stakeholders from the outset to ensure that the findings are taken seriously and that there is a collective organisational response. You will also need to devote time to analysing the data and understanding what the findings mean. The Guide: planning to use the tracker, available on the Guidance for Institutions page, includes a resource planning table with more detail.

Can we use the tracker with other surveys?

In general we encourage you to use the tracker alongside other data collection activities. For example, you will have some general evidence about learners’ digital experiences from national measures such as the NSS, student barometer or UKSE in HE, or SPOCS and Ofsted Learner View in FE and Skills. You may well have run local surveys or focus groups to help you gain learners’ perspectives on certain systems. The tracker allows you to collect more detail on some of these areas while keeping a broad view of what the digital experience means. Our Guide to analysing your data suggests ways that you can use findings from the tracker alongside findings from other sources.

You can now include a small number of additional questions at the end of the tracker survey so you do not have to design and run a separate survey. You can then use the native BOS analytics (or your own preferred data analysis system) to explore how responses to your customised questions relate to others in the tracker. You will not, of course, be able to benchmark responses to your own questions against other institutions.

You won’t be able to embed the tracker or any part of it into your own survey system and expect to benchmark your findings. Benchmarking is only possible within BOS as part of the tracker process. BOS also guarantees the security of your institutional data.

Does the tracker assess learners’ digital capabilities?

No. The tracker is not an assessment tool for individuals; it is designed to help you understand how well your organisation is meeting learners’ needs overall. Jisc is developing services in the area of digital capability which will include a discovery tool to assess learners’ digital aptitudes in more detail, and will give individual feedback to support their development needs.

Does the tracker support learning analytics?

Yes. You now have the facility to use unique identifiers with the tracker so that you can easily associate tracker responses with other learner data if you wish. There is more about this in the Guide to using the tracker in BOS.
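As a rough illustration of what associating responses with other learner data could look like once you have exported your responses, here is a minimal Python sketch. It assumes both data sets have been loaded as lists of dictionaries (for example with `csv.DictReader`) and that they share an identifier column. The column name `learner_id` and the function `link_responses` are hypothetical and are not part of BOS or the tracker.

```python
def link_responses(tracker_rows, learner_rows, id_field="learner_id"):
    """Join tracker responses to other learner records on a shared identifier.

    `id_field` is a hypothetical column name; use whatever identifier
    your institution actually configured.
    """
    # Index the learner records by identifier for fast lookup.
    learners = {row[id_field]: row for row in learner_rows}

    linked = []
    for row in tracker_rows:
        match = learners.get(row.get(id_field))
        if match is not None:
            # Combine the two records; tracker answers win on a name clash.
            linked.append({**match, **row})
    # Responses with no matching learner record are left out.
    return linked
```

Any linked data set contains personal data, so it should be handled in line with your institution’s data protection policies.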

Where can we see the actual questions?

You can access the question sets as PDF downloads from the Guidance page.

Why can we only customise some of the questions?

The question sets have been developed for use by a diverse range of learners across a wide range of institutions. Using the same set of questions has many benefits.

- You can compare your data with other providers across your sector.

- These questions have been piloted and optimised for accessibility and usability. You can be confident that learners will understand and answer them reliably.

- The tracker covers the issues we have found to be most important to learners and most actionable in practice. We don’t believe in asking learners for feedback unless there is a good chance it will be acted on.

- The questions are designed to be stable over time, allowing you to compare results year on year.

Some questions need to be customised for your institution, in particular the name of your VLE and the way you partition learners into groups for comparative analysis, e.g. by course or broad subject of study, stage of study, place of study etc. You also have the option to add a small number of your own questions. There is detailed information in the Guide to customising your tracker, available from the Guidance page.

Why are there separate questions for different sectors?

In fact, the different sets of questions are almost exactly the same, apart from some differences of language. This was the preference that emerged from our pre-pilot consultation events. The slightly different questions for online learners, for learners studying in the Adult Learning and Skills sector, and for apprenticeship/work-based learners reflect the fact that they are unlikely to be studying on a campus.

You receive the question set(s) you select. You can choose to run two different trackers at the same time, e.g. for online learners and for mainstream HE learners. If you do this, our guidance can help you to pool the data from the different surveys, and/or to compare the two.

How have the questions been developed and updated?

The questions included in the tracker surveys evolved from the work of the Digital Student project, including the 7 Challenge areas and the Jisc/NUS Digital Student Experience benchmarking tool. During the consultation phase we selected and refined those questions that were popular with both learners and managers. Then, in user testing, we further refined the question set to make it simple to understand and quick to complete. The question set for the first pilot was extremely concise, which contributed to the high response rates achieved at many of the 24 pilot sites. However, feedback suggested that we had missed some important issues. The question sets for the current pilot were refined after factor analysis of the 2016-17 data and extensive evaluation with users from that cohort. This question set is longer and more detailed than the one we first delivered in 2016.

Check our blog for current details of the research associated with the tracker.

Is the tracker mainly about online learning?

No. We use the term ‘student/learner digital experience’ to mean all experiences that learners have with digital technology, which might be in a classroom setting, online, or outside of their course of study. If you are particularly interested in understanding the experiences of online learners, you can use our specialist online tracker.

How do we run a prize draw?

Prize draws are a great way to boost participation. You can now use p.9 in the tracker survey to collect email addresses from learners and randomly select winners from responses to this question. Responses to this question should not be used for any other purposes and should be removed from the data set once the prize has been given. Include details of the prize(s) in all your communications with students.
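The draw itself can be done in any spreadsheet or scripting tool. As one possible approach, the Python sketch below picks winners at random from exported responses and then strips the email addresses from the data set. It assumes the responses have been loaded as a list of dictionaries (for example with `csv.DictReader`). The column name `prize_draw_email` and both function names are hypothetical, so replace the column name with whatever your export actually uses.

```python
import random


def draw_winners(responses, email_field="prize_draw_email", n_winners=1, seed=None):
    """Pick up to n_winners distinct email addresses at random."""
    # Normalise and de-duplicate addresses so that a learner who
    # responded twice only gets one entry; skip blank answers.
    emails = sorted(
        {(r.get(email_field) or "").strip().lower() for r in responses} - {""}
    )
    rng = random.Random(seed)  # pass a seed only if you need a repeatable draw
    return rng.sample(emails, min(n_winners, len(emails)))


def strip_emails(responses, email_field="prize_draw_email"):
    """Return copies of the responses with the email column removed."""
    return [{k: v for k, v in r.items() if k != email_field} for r in responses]
```

Run `draw_winners` first, contact the winners, and then keep only the output of `strip_emails` for analysis.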

Will we have support for using BOS?

It can be helpful to have some BOS experience in your team, but the interface is designed for educational users, is easy to navigate, and includes a ‘help and support’ button at the top right hand side of most screens. The online manual is here: https://www.onlinesurveys.ac.uk/help-support/. There is a comprehensive Guide to using the tracker in BOS available from the Guidance page with screenshots and walk-throughs, and a tracker support email address for technical problems.

How will we distribute the Tracker to our learners?

When you are ready you will launch the Tracker survey from the survey launchpad in BOS. You can then distribute the public URL in whatever way you decide will work best with your learners. Our Guide to engaging learners has advice on achieving a good take-up and completion rate. Alternatively you can use survey access control functions in BOS to set up a respondent list, and send invitation emails to individual students containing personalised URLs. This is particularly recommended if you already use respondent lists in BOS for other surveys. There is more about distributing surveys in the BOS manual at: https://www.onlinesurveys.ac.uk/help-support/sharing-your-survey/.

What can we do with the data from the tracker?

Your institutional data belongs jointly to your institution and to Jisc. Once you have downloaded data from BOS for analysis locally, you are responsible for managing it in accordance with the data protection policies of your institution. Jisc has produced guidance on how we will use and manage the data that we hold. Unless you opt out, your data will be made available to your Jisc Account Manager for you to discuss together. You may decide to share aggregated data with specific other institutions for the purpose of direct comparison, but this is for you to decide and arrange in accordance with your own data protection and partnership policies.

Can we publish reports about our tracker data?

Yes, you can publish reports about aggregated data from the tracker so long as you ensure complete student anonymity and comply with your institution’s data management policies. Be aware that you will probably need to complete your organisation’s research ethics process if you plan to use data from the tracker to publish and present externally. This is usually not necessary if you only plan to write internal reports and use the tracker data to develop internal recommendations.

We ask that Jisc is acknowledged as having provided the survey instrument and support for its use. If you are producing an academic paper or presentation that draws on your tracker findings, please refer to the survey itself as: Beetham H. and Newman T. (2016) Digital Student Experience Tracker [Survey instrument]. Jisc. Do let us know about any publications based on the tracker so that we can share and promote them.