If you’ve been following the 2017-18 tracker you’ll know that we’ve released a version of the new question set (HE) for you to review in BOS. You can also download a pdf file of these questions to share with colleagues. The FE questions use ‘college’ instead of ‘university’ but are otherwise the same. The questions for ACL, skills-based and online learners have some minor differences in the wording and the options, particularly to account for the fact that many learners will not be attending on campus. But they are as similar as possible to the other question sets to support valid comparisons across sectors and modes of learning.

If you’ve signed up for the tracker this year you’ll soon have access to the master surveys you requested so you can copy and customise them for your students. If you haven’t, we hope that a review of the questions will convince you that the answers will be useful. Guidance is also being completely updated to help you through the process and give you more ideas for engaging students. You can still sign up for this year’s tracker here.

If you used the tracker last year you will notice a few changes to the question set, and the rest of this blog post describes what they are and why we’ve made them. If you are interested in the tracker as a research tool or if you (or your organisation) want evidence of the research that has gone into its design, this post is also for you. Please comment on our thinking at the end – we are always keen to have this conversation!

Structure and navigation

We’ve grouped the questions slightly differently this year for a better flow. Each section is colour-coded so that students can see ‘where they are’ in the question set and broadly what their answers are about.

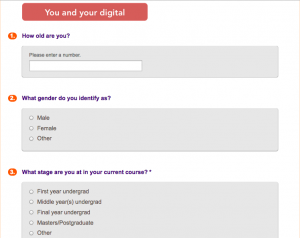

You and your digital

The first section includes the fixed demographic questions (these can’t be customised) and a question about use of personal devices for learning. This has been split off from the question about institutional devices for learning, as last year we found that BOS displayed the two results in a confusing way. The question about stage of course can’t be customised so that we can look for any course-stage effects across the data. Please note that the options here are different for the different sectors.

We’ve kept the question ‘In your own learning time, how often do you use digital tools or apps to…’ do various activities, plus the free text question nominating a favourite app for study. These were popular with organisations and informative to us. However, we’ve lost the self-assessment questions about basic digital skills. Students’ judgement of their own skills was so overwhelmingly positive that responses did not provide any useful differentiation, and it is not obvious in any case how institutions should interpret the results. We think the student Discovery Tool will do the job much better, at a greater level of detail, and with more actionable feedback for individual users (more information from this link).

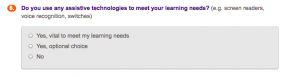

The questions on assistive technology, very slightly modified, appear in this section too.

Digital at your uni (college)

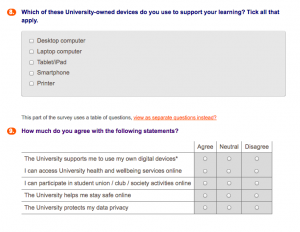

This is where we now ask about access to digital services (Wi-Fi, course materials, e-books and e-journals, file storage) and about students’ use of institutional devices.

Questions about university/college provision have been grouped together and a new prompt has been added: ‘The university/college supports me to use my own digital devices’. As we did last year, we ask who students turn to for help with their digital skills. We’ve kept the opportunity for students to tell universities/colleges ‘What one thing should we DO’ and ‘What one thing should we NOT do’ to support their digital learning experience.

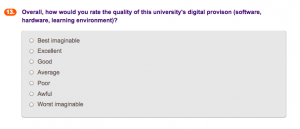

Finally, in this section we ask the first of two new questions about satisfaction: ‘Overall, how would you rate the quality of this university’s digital provision?’ (see below). The second question, further on in the survey, asks ‘Overall, how would you rate the quality of this university’s digital teaching and learning?’

Both questions use the seven-point ‘System Usability Scale’, which has been shown to pick up relatively subtle changes over time and is supported by recent research. We’re particularly excited to introduce these items to the tracker. We hope they will stand as two key metrics that can be used to assess the impact of different organisational initiatives over time (see our recent blog post on the organisational data we are now collecting).

Digital on your course

This section starts with a question about the regularity of different course activities, which last year’s pilot sites found informative and which gave us some great headlines for the overall report. We have slightly changed the order and wording to improve reliability of interpretation. After this comes the question set about the virtual learning environment that was optional last year but so widely used that we now recognise it is core to the digital learning experience. You’ll still be able to add in the name of your own VLE, as we realise this is how most students will know it.

The following two sets of questions bring in some completely new issues. These are based on the same research we carried out to inform the design of the second sign-up form.

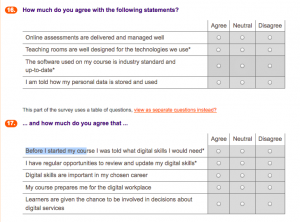

Teaching spaces (‘Teaching spaces are well designed for the technologies we use’). There is plenty of evidence that this matters to the overall digital learning experience, and it will be interesting to compare the answers of students with the judgements of staff.

Course software (‘The software used on my course is industry standard and up to date’). We find that this is important to many students and again we are asking organisational leads to assess the quality of course provision.

Digital skills (‘I have regular opportunities to review and update my digital skills’ and ‘Before I started my course I was told what digital skills I would need’). These are based on research findings about students’ readiness to learn, digital fluency, and the reinforcement of digital skills through regular, meaningful practice.

The second overall satisfaction measure appears at the bottom of this page.

Attitude to digital learning

This page includes two sets of positive and negative statements about digital learning, identical to last year’s pilot because of the very strong validity of the response data and the popularity of the questions. There are also two new questions:

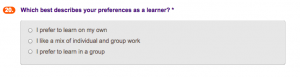

The question about preferences for individual or group work was trialled with online learners last year but this year makes it into the main question set. The second question was included because the query ‘what one thing should we NOT do’ last year elicited many similar answers, along the lines of ‘(don’t) increase the amount of digital learning (relative to f2f)’. A smaller but important number of learners responded ‘(don’t) take away the digital services we have’. Overall there was much higher agreement with prompts about relying on digital learning than about the enjoyment of digital learning, and we want to help organisations untangle what learners really feel about the amount of digital learning they do.

And finally…

There is now just one page of customisable questions and more freedom to add/substitute questions of your own design. We’ve included a recommended question type if you are less adventurous or if you just want to keep the user experience smooth. New guidance on customisation will be available very shortly from the guidance page. This page is also where you can use the option to add a hidden question and upload student email addresses, triggering separate emails to them for survey completion. More about this in my next post.

For more about the tracker project contact Sarah Knight or (if you are already signed up and experiencing problems) Tracker Support.